Accepted papers

Authors:

Joao B Monteiro (Institut National de la Recherche Scientifique)*; Jahangir Alam (Computer Research Institute of Montreal (CRIM), Montreal (Quebec) Canada); Tiago H Falk (INRS-EMT)

Abstract:Time delay neural networks (TDNN) have become ubiquitous for voice biometrics and language recognition tasks relying on utterance-level speaker- or language-dependent representations. In this paper, we discuss directions to improve upon the conventional TDNN architecture to render it more generally applicable. More specifically, we explore the utility of performing pooling operations across different levels of the convolutional stack and further propose an approach to efficiently combine such a set of representations. We show that the resulting models are more versatile, in the sense that a fixed architecture can be re-used across different tasks, and that the learned representations are more discriminative. Evaluations are performed across two settings: (1) two sub-tasks for spoofing attack detection, and (2) three sub-tasks for spoken language identification. Results show that the proposed design yields improvements over the original TDNN architecture, as well as over other previously proposed methods.
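A minimal sketch of pooling at several depths of the stack, assuming mean-and-standard-deviation statistics pooling over time at each layer and plain concatenation as the combination step. The abstract does not specify the pooling operator or the proposed combination approach, so `stats_pool` and `multi_level_pool` are hypothetical stand-ins; each layer's output is represented as a list of frame vectors.

```python
import math
from typing import List

Frame = List[float]

def stats_pool(frames: List[Frame]) -> List[float]:
    """Mean and standard deviation over time for a T x D sequence of frames."""
    t, d = len(frames), len(frames[0])
    means = [sum(f[j] for f in frames) / t for j in range(d)]
    stds = [math.sqrt(sum((f[j] - means[j]) ** 2 for f in frames) / t) for j in range(d)]
    return means + stds

def multi_level_pool(layer_outputs: List[List[Frame]]) -> List[float]:
    """Pool each layer separately, then concatenate (hypothetical combination step)."""
    pooled: List[float] = []
    for frames in layer_outputs:
        pooled.extend(stats_pool(frames))
    return pooled
```

Layers may differ in width and number of frames; each contributes a fixed-size 2·D summary, so the concatenated vector has a fixed length regardless of utterance duration.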

Authors:

Di Jin (Amazon Alexa AI)*; Shuyang Gao (Amazon); Seokhwan Kim (Amazon Alexa AI); Yang Liu (Amazon, Alexa AI); Dilek Z Hakkani-Tur (Amazon Alexa AI)

Abstract:Most prior work on task-oriented dialogue systems is restricted to supporting domain APIs. However, users may have requests that are out of the scope of these APIs. This work focuses on identifying such user requests. Existing methods for this task mainly rely on fine-tuning pre-trained models on large annotated datasets. We propose a novel method, REDE, based on adaptive representation learning and density estimation. REDE can be applied to zero-shot and few-shot cases, and with only a few shots it can quickly learn a high-performing detector, comparable to the fully supervised setting, by updating fewer than 3K parameters. We demonstrate REDE's competitive performance on the DSTC9 Track 1 dataset and on our newly collected test set.
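The density-estimation idea can be illustrated with a diagonal Gaussian fitted to in-domain representations, where a low log-density flags an out-of-scope request. This is a generic sketch under that assumption, not REDE itself: the adaptive representation learning step is omitted, and the threshold is a hypothetical value that would be tuned on held-out data.

```python
import math
from typing import List, Tuple

Vector = List[float]

def fit_diag_gaussian(xs: List[Vector], eps: float = 1e-6) -> Tuple[Vector, Vector]:
    """Per-dimension mean and variance of in-domain representations."""
    n, d = len(xs), len(xs[0])
    mu = [sum(x[j] for x in xs) / n for j in range(d)]
    # eps keeps every variance strictly positive
    var = [sum((x[j] - mu[j]) ** 2 for x in xs) / n + eps for j in range(d)]
    return mu, var

def log_density(x: Vector, mu: Vector, var: Vector) -> float:
    """Log-likelihood of x under the fitted diagonal Gaussian."""
    return -0.5 * sum(math.log(2 * math.pi * v) + (xi - m) ** 2 / v
                      for xi, m, v in zip(x, mu, var))

def is_out_of_scope(x: Vector, mu: Vector, var: Vector, threshold: float) -> bool:
    """Flag inputs whose density falls below a (hypothetical, tuned) threshold."""
    return log_density(x, mu, var) < threshold
```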

Authors:

Di Jin (Amazon Alexa AI)*; Shuyang Gao (Amazon); Seokhwan Kim (Amazon Alexa AI); Yang Liu (Amazon, Alexa AI); Dilek Z Hakkani-Tur (Amazon Alexa AI)

Abstract:In many real-world settings, machine learning models need to identify user inputs that are out-of-domain (OOD) so as to avoid performing wrong actions. This work focuses on a challenging case of OOD detection, where no labels for in-domain data are accessible (e.g., no intent labels for the intent classification task). To this end, we propose a novel representation learning method that combines unsupervised clustering and contrastive learning so that better data representations for OOD detection can be learned. Through extensive experiments, we demonstrate that this method can be competitive even with state-of-the-art supervised approaches that use label information.

Authors:

Abstract:Previous work in continual learning for Named Entity Recognition (NER) relies on the assumption that an abundance of labeled data exists in the new datasets arriving over time. This assumption is usually unrealistic, since the token-level annotations required for NER training are laborious to obtain and scarce, especially for new (unseen) classes. We present the first work to study continual few-shot learning for NER, which is more general, and as a result more challenging, than continual learning for NER. To alleviate catastrophic forgetting in continual few-shot learning, we reconstruct synthetic training data of the previously seen classes from the NER model and further develop a framework that distills from the existing model using both synthetic data and real data from the current training set. Experimental results on several NER benchmarks show that our approach achieves significant improvements over existing baselines.

Authors:

Tal Schuster (MIT CSAIL)*; Adam Fisch (MIT); Tommi Jaakkola (MIT); Regina Barzilay (MIT CSAIL)

Abstract:We develop a novel approach for confidently accelerating inference in the large and expensive multilayer Transformers that are now ubiquitous in natural language processing (NLP). Amortized or approximate computational methods increase efficiency, but can come with unpredictable performance costs. In this work, we present CATs (Confident Adaptive Transformers), in which we simultaneously increase computational efficiency while guaranteeing, with high confidence, a specifiable degree of consistency with the original model. Our method trains additional prediction heads on top of intermediate layers and dynamically decides when to stop allocating computational effort to each input using a meta consistency classifier. To calibrate our early prediction stopping rule, we formulate a unique extension of conformal prediction. We demonstrate the effectiveness of this approach on four classification and regression tasks.

Authors:

Irene Li (Yale University)*; Vanessa Yan (Yale University); Dragomir Radev (Yale University)

Abstract:Prerequisite chain learning helps people acquire new knowledge efficiently. While people may quickly determine learning paths over concepts in a domain, finding such paths in other domains can be challenging. We introduce Domain-Adversarial Variational Graph Autoencoders (DAVGAE) to solve this cross-domain prerequisite chain learning task efficiently. Our novel model consists of a variational graph autoencoder (VGAE) and a domain discriminator. The VGAE is trained to predict concept relations through link prediction, while the domain discriminator takes both source and target domain data as input and is trained to predict domain labels. Most importantly, unlike the current state-of-the-art model, this method needs only simple homogeneous graphs as input. We evaluate our model on the LectureBankCD dataset, and results show that our model outperforms recent graph-based baselines while using only 1/10 of the graph scale and 1/3 of the computation time.

Authors:

Rongsheng Zhang (Fuxi AI Lab, Netease Inc.)*; Yinhe Zheng (Alibaba Group); Xiaoxi Mao (Fuxi AI Lab, Netease Inc.); Minlie Huang (Tsinghua University)

Abstract:Unsupervised domain adaptation (UDA) with pre-trained language models (LM) has achieved promising results, since these pre-trained models embed generic knowledge learned from various domains. However, fully fine-tuning the LM for UDA may distort the learned knowledge, and a fully fine-tuned LM is also expensive to deploy. This paper explores an adapter-based fine-tuning approach for unsupervised domain adaptation. Specifically, several trainable adapter modules are inserted into a pre-trained LM, and the embedded generic knowledge is preserved by freezing the parameters of the original LM during fine-tuning. A domain-fusion scheme is introduced to train these adapters on a corpus from mixed domains so that they better capture transferable features. Extensive experiments on two benchmark datasets demonstrate that our approach is effective across different tasks, dataset sizes, and domain similarities.
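A bottleneck adapter of the kind commonly inserted into frozen LMs can be sketched as a down-projection, a nonlinearity, an up-projection, and a residual connection. The ReLU activation, the weight names `W_down`/`W_up`, and zero-initializing `W_up` are assumptions for illustration; the abstract does not describe the adapter internals, and the domain-fusion training scheme is not shown.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]

def matvec(M: Matrix, x: Vector) -> Vector:
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

def adapter(h: Vector, W_down: Matrix, W_up: Matrix) -> Vector:
    """h + W_up . ReLU(W_down . h); only W_down and W_up would be trained."""
    z = [max(0.0, v) for v in matvec(W_down, h)]            # project d -> r, then ReLU
    return [hi + ui for hi, ui in zip(h, matvec(W_up, z))]  # project r -> d, add residual

def adapter_params(d: int, r: int) -> int:
    """Trainable weights per adapter (biases omitted): d*r for W_down plus r*d for W_up."""
    return 2 * d * r
```

With `W_up` set to zeros, the adapter starts as the identity, so the frozen LM behaves exactly as before until the adapters are trained.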

Authors:

Tasnima Sadekova (Huawei Noah's Ark Lab); Vadim Popov (Huawei Noah's Ark Lab); Vladimir Gogoryan (Huawei Noah's Ark Lab; Higher School of Economics); Ivan Vovk (Huawei Noah's Ark Lab; Higher School of Economics); Mikhail Kudinov (Huawei Noah's Ark Lab)*

Abstract:Recent advances in neural text-to-speech (TTS) have made it possible to build multi-speaker systems capable of high-fidelity speech generation. However, it is often desirable to be able to add a new voice to a TTS system based on only a few recordings. In this work, we study several approaches to the design of on-device voice cloning. Starting from a multi-speaker TTS system, we improve its quality for a target speaker by fine-tuning the feature generation module on a small speech sample. We compare the performance of a feature generation module based on conventional Tacotron2 with step-wise monotonic attention against ones based on Non-attentive Tacotron and Glow-TTS. We show that Non-attentive Tacotron significantly outperforms the attention-based model and demonstrate that a compact on-device TTS system of good quality can be obtained using only 1 minute of adaptation data and no more than 200 iterations of SGD, corresponding to less than 1 hour of on-device training time on a consumer mobile phone.

Authors:

Tanya G Roosta (Amazon)*; Peyman Passban (Amazon); Ankit R Chadha (Amazon)

Abstract:Training neural machine translation (NMT) models in federated learning (FL) settings can be inefficient both computationally and in terms of communication, due to the large size of translation engines as well as the multiple rounds of updates required to train clients and a central server. In this paper, we explore how to efficiently build NMT models in an FL setup by proposing a novel solution. To reduce the communication overhead, out of all neural layers we exchange only what we term "Controller" layers. Controllers are a small number of additional neural components connected to our pre-trained architectures. These new components are placed between the original layers. They act as liaisons to communicate with the central server and learn minimal information that is sufficient to update clients. We evaluated the performance of our models on five datasets from different domains, translating from German into English. We noted that the models equipped with Controllers perform on par with those trained in a centralized, non-FL setting. In addition, we observed a substantial reduction in the communication traffic of the FL pipeline, which is a direct consequence of using Controllers. Based on our experiments, Controller-based models are 6 times less expensive than their counterparts. This reduction is particularly important given the number of parameters in large models, and it becomes even more critical when such parameters need to be exchanged over multiple rounds in FL settings.
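The communication saving comes from sending only the Controller tensors to the server. The sketch below assumes plain federated averaging (FedAvg) over those tensors and uses hypothetical parameter names (`"encoder"`, `"controller"`); the abstract does not say how the server aggregates Controller updates.

```python
from typing import Dict, List, Set

Params = Dict[str, List[float]]

def average_controllers(client_params: List[Params], controller_keys: Set[str]) -> Params:
    """Server step: FedAvg over the Controller tensors only; other layers stay on the clients."""
    n = len(client_params)
    return {
        key: [sum(p[key][i] for p in client_params) / n
              for i in range(len(client_params[0][key]))]
        for key in controller_keys
    }

def communicated_fraction(params: Params, controller_keys: Set[str]) -> float:
    """Share of all parameters that is actually exchanged each round."""
    total = sum(len(v) for v in params.values())
    return sum(len(params[k]) for k in controller_keys) / total
```

Per-round traffic then scales with `communicated_fraction` of the model rather than with the full parameter count.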

Authors:

Piotr Tarasiewicz (UCL); Sultan Kenjeyev (UCL); Ilana Sebag (UCL)*; Shehab Alshehabi (UCL)

Abstract:The recent emergence of deep learning methods has enabled the research community to achieve state-of-the-art results in several domains, including natural language processing. However, current robocall systems remain unstable and inaccurate: text generators and chatbots can be tedious and can misunderstand human dialogue. In this work, we study the performance of two models able to enhance an intelligent conversational agent through adversarial conversational shaping: a generative adversarial network with policy gradient (GANPG) and a generative adversarial network with reward for every generation step (REGS), based on the REGS model presented in Li et al. This model is able to assign rewards to both partially and fully generated text sequences. We discuss performance under different training details: seq2seq and transformers in a reinforcement learning framework.

Authors:

Shentong Mo (Carnegie Mellon University)*; Jingfei Xia (Carnegie Mellon University); Ihor Markevych (Carnegie Mellon University)

Abstract:Visual and linguistic pre-training aims to learn vision and language representations jointly, which can then be transferred to visual-linguistic downstream tasks. However, there exists semantic confusion between language and vision during the pre-training stage. Moreover, current pre-trained models tend to require substantial computational resources for fine-tuning when transferred to downstream tasks. In this work, we present a simple but effective approach for Adaptive Fine-tuning of Vision and Language pre-trained models, namely AFVL. Specifically, we introduce a pair-wise contrastive loss to learn alignments between the whole sentence and each image in the same batch during the pre-training process. At the fine-tuning stage, we introduce two lightweight adaptation networks that reduce model parameters and increase training speed to save computational resources. We evaluate our AFVL on four main downstream tasks: Visual Question Answering (VQA), Visual Commonsense Reasoning (VCR), Natural Language for Visual Reasoning (NLVR), and Region-to-Phrase Grounding (RPG). Compared to previous methods, our AFVL achieves comparable or better results while saving training time and GPU memory for fine-tuning by a large margin. Extensive experiments and ablation studies demonstrate the efficiency of the contrastive pre-training and adaptive fine-tuning proposed in AFVL.
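A common way to implement a batch-wise sentence-image contrastive loss is symmetric InfoNCE over the cosine-similarity matrix: matched pairs sit on the diagonal, and every other item in the batch serves as a negative. This formulation and the temperature `tau` are assumptions for illustration; the abstract does not give AFVL's exact loss.

```python
import math
from typing import List

Vector = List[float]

def cosine(u: Vector, v: Vector) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def _mean_row_ce(logits: List[List[float]]) -> float:
    """Mean cross-entropy where the correct 'class' of row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        mx = max(row)
        log_z = mx + math.log(sum(math.exp(s - mx) for s in row))  # stable log-sum-exp
        total += log_z - row[i]
    return total / len(logits)

def info_nce(texts: List[Vector], images: List[Vector], tau: float = 0.07) -> float:
    """Symmetric contrastive loss: sentence i should match image i within the batch."""
    sim = [[cosine(t, im) / tau for im in images] for t in texts]
    sim_t = [list(col) for col in zip(*sim)]  # image-to-text direction
    return 0.5 * (_mean_row_ce(sim) + _mean_row_ce(sim_t))
```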

Authors:

Shira Guskin (Intel)*; Moshe Wasserblat (Intel); Ke Ding (Intel); Gyuwan Kim

Abstract:Limited computational budgets often prevent transformers from being used in production and from having their high accuracy utilized. TinyBERT addresses computational efficiency by self-distilling BERT into a smaller transformer representation with fewer layers and a smaller internal embedding. However, TinyBERT's performance drops when the number of layers is reduced by 50%, and drops even more abruptly when it is reduced by 75% for advanced NLP tasks such as span question answering. Additionally, a separate model must be trained for each inference scenario with its distinct computational budget. In this work, we present Dynamic-TinyBERT, a TinyBERT model that uses sequence-length reduction and hyperparameter optimization for enhanced inference efficiency under any computational budget. Dynamic-TinyBERT is trained only once, performs on par with BERT, and achieves an accuracy-speedup trade-off superior to other efficient approaches (up to 3.3x speedup with <1% accuracy drop). Upon publication, the code to reproduce our work will be open-sourced.

Authors:

Ravi Teja Gadde (Amazon)*; Ivan Bulyko (Amazon)

Abstract:Neural language models (LM) trained on diverse corpora are known to work well on previously seen entities; however, updating these models with dynamically changing entities such as place names, song titles, and shopping items requires re-training from scratch and collecting full sentences containing these entities. We aim to address this issue by introducing entity-aware language models (EALM), in which we integrate entity models trained on catalogues of entities into the pre-trained LMs. Our combined language model adaptively adds information from the entity models into the pre-trained LM depending on the sentence context. Our entity models can be updated independently of the pre-trained LM, enabling us to influence the distribution of entities output by the final LM without any further training of the pre-trained LM. We show significant perplexity improvements on task-oriented dialogue datasets, especially on long-tailed utterances, with an ability to continually adapt to new entities (to an extent).

Authors:

Aashiq Muhamed (Amazon)*; Iman Keivanloo (Amazon); Sujan Perera (Amazon); James A Mracek (Amazon); Yi Xu (Amazon); Qingjun Cui (Amazon); Santosh Rajagopalan (Amazon); Belinda Zeng (Amazon); Trishul A Chilimbi (Amazon)

Abstract:While pre-trained large language models (LLM) like BERT have achieved state-of-the-art results on several NLP tasks, their performance on tasks with additional grounding, e.g., with numeric and categorical features, is less studied. In this paper, we study the application of pre-trained LLMs to click-through-rate (CTR) prediction for product advertisement in e-commerce. This is challenging because the model needs to a) learn from language as well as tabular data features, b) maintain low latency (<5 ms) at inference time, and c) adapt to a constantly changing advertisement distribution. We first show that scaling the pre-trained language model to 1.5 billion parameters significantly improves performance over conventional CTR baselines. We then present CTR-BERT, a novel lightweight, cache-friendly factorized model for CTR prediction that consists of twin-structured BERT-like encoders for text, with a late-fusion mechanism for text and tabular features. We train the CTR-BERT model using cross-architecture knowledge distillation (KD) and empirically study the interaction between KD and distribution shift in this setting by a) experimenting with pre-training, distillation pre-finetuning, and fine-tuning strategies, and b) factorizing features based on their distribution-shift time scales, which allows the model to readily adapt and be re-trained. Finally, we show that CTR-BERT significantly outperforms a traditional CTR baseline, with a 2.3% relative ROC-AUC lift in offline experiments and a 2% CTR lift in an online experiment.

Authors:

Xuanli He (Monash University)*; Iman Keivanloo (Amazon); Yi Xu (Amazon); Xiang He (Amazon); Belinda Zeng (Amazon); Santosh Rajagopalan (Amazon); Trishul A Chilimbi (Amazon)

Abstract:Pre-training and then fine-tuning large language models is commonly used to achieve state-of-the-art performance in natural language processing (NLP) tasks. However, most pre-trained models suffer from low inference speed, and deploying such large models in applications with latency constraints is challenging. In this work, we focus on accelerating inference via conditional computation. To achieve this, we propose a novel idea, Magic Pyramid (MP), which reduces both width-wise and depth-wise computation via token pruning and early exiting for Transformer-based models, particularly BERT. The former saves computation by removing non-salient tokens, while the latter reduces computation by terminating inference before the final layer is reached, if the exiting condition is met. Our empirical studies demonstrate that, compared to previous state-of-the-art methods, MP not only achieves speed-adjustable inference but also surpasses token pruning and early exiting alone, reducing giga floating point operations (GFLOPs) by up to 70% with less than 0.5% accuracy drop. Token pruning and early exiting express distinct preferences for sequences of different lengths. However, MP achieves an average speedup of 8.06x on two popular text classification tasks, regardless of input size.
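The interplay of the two mechanisms can be sketched as a loop that, after each layer, checks an exit condition and otherwise drops the least salient tokens before the next layer. The saliency function, the fixed keep ratio, and the max-probability exit threshold are hypothetical choices for illustration; the abstract does not specify MP's saliency measure or exiting criterion.

```python
from typing import Callable, List, Sequence, Tuple

Tokens = List[List[float]]

def prune_tokens(tokens: Tokens, saliency: Sequence[float], keep_ratio: float) -> Tokens:
    """Width-wise reduction: keep the most salient tokens, preserving their order."""
    k = max(1, int(round(len(tokens) * keep_ratio)))
    top = sorted(range(len(tokens)), key=lambda i: saliency[i], reverse=True)[:k]
    return [tokens[i] for i in sorted(top)]

def infer(
    tokens: Tokens,
    layers: List[Callable[[Tokens], Tokens]],
    heads: List[Callable[[Tokens], List[float]]],
    saliency_fn: Callable[[Tokens], List[float]],
    keep_ratio: float,
    threshold: float,
) -> Tuple[List[float], int]:
    """Depth-wise reduction: stop as soon as an intermediate head is confident enough."""
    probs: List[float] = []
    for depth, (layer, head) in enumerate(zip(layers, heads), start=1):
        tokens = layer(tokens)
        probs = head(tokens)          # intermediate prediction head
        if max(probs) >= threshold:   # exit condition met: skip remaining layers
            return probs, depth
        tokens = prune_tokens(tokens, saliency_fn(tokens), keep_ratio)
    return probs, len(layers)
```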

Authors:

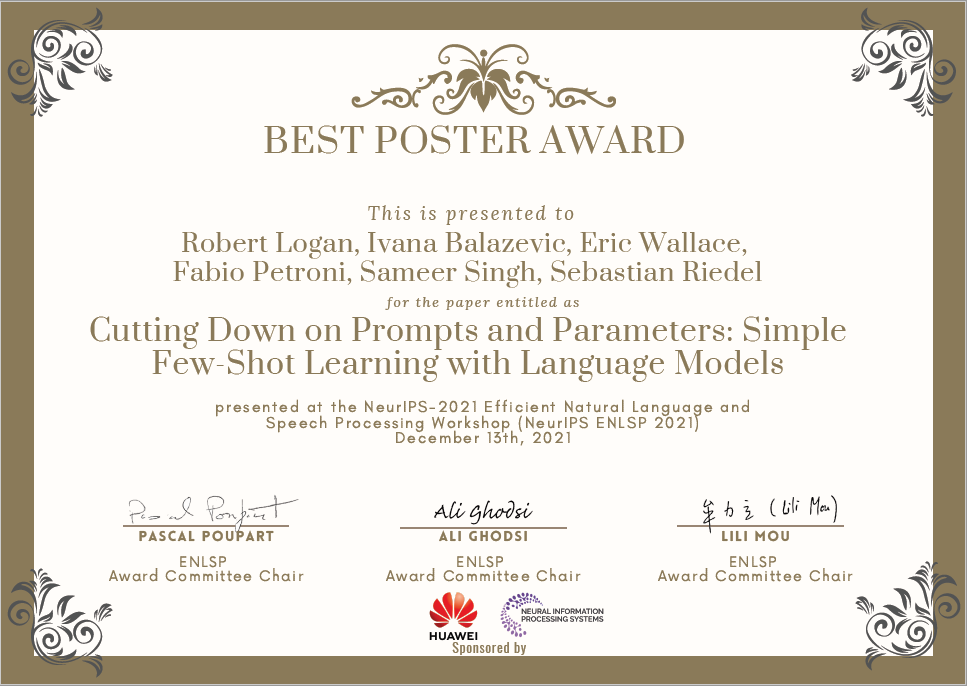

Robert L Logan (UC Irvine)*; Ivana Balazevic (University of Edinburgh); Eric Wallace (U.C. Berkeley); Fabio Petroni (Facebook AI Research); Sameer Singh (University of California, Irvine); Sebastian Riedel

Abstract:Prompting language models (LMs) with training examples and task descriptions has been seen as critical to recent successes in few-shot learning. In this work, we show that finetuning LMs in the few-shot setting can considerably reduce the need for prompt engineering. In fact, one can use null prompts, i.e., prompts that contain neither task-specific templates nor training examples, and achieve accuracy competitive with manually tuned prompts across a wide range of tasks. While finetuning LMs does introduce new parameters for each downstream task, we show that this memory overhead can be substantially reduced: finetuning only the bias terms can achieve comparable or better accuracy than standard finetuning while updating only 0.1% of the parameters. All in all, we recommend finetuning LMs for few-shot learning, as it is more accurate, is robust to different prompts, and can be made nearly as efficient as using frozen LMs.
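The idea of updating only bias terms can be shown on a toy linear model `y = Wx + b`: `W` stays frozen, and gradient descent on a squared error touches only `b`. This is a hypothetical minimal example on a toy model, not a transformer; the learning rate and step count are arbitrary.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]

def forward(W: Matrix, b: Vector, x: Vector) -> Vector:
    return [sum(w * xj for w, xj in zip(row, x)) + bi for row, bi in zip(W, b)]

def finetune_bias_only(W: Matrix, b: Vector, data: List[Tuple[Vector, Vector]],
                       lr: float = 0.1, steps: int = 100) -> Vector:
    """SGD on 0.5 * ||Wx + b - y||^2 with W frozen; the gradient w.r.t. b is (Wx + b - y)."""
    b = list(b)  # W is never modified
    for _ in range(steps):
        for x, y in data:
            pred = forward(W, b, x)
            b = [bi - lr * (p - yi) for bi, p, yi in zip(b, pred, y)]
    return b

def trainable_fraction(W: Matrix, b: Vector) -> float:
    """Fraction of parameters updated when only the bias is trainable."""
    n_weights = sum(len(row) for row in W)
    return len(b) / (n_weights + len(b))
```

In real LMs the weight matrices dwarf the bias vectors, which is why the trainable fraction becomes tiny there; in this 2x2 toy it is one third.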

Authors:

Tianda Li (Noah's Ark Lab (Montreal))*; Yassir El Mesbahi (Huawei); Ivan Kobyzev (Huawei Noah's Ark Lab); Ahmad Rashid (Huawei Noah's Ark Lab); Atif A Mahmud (Huawei Noah's Ark Lab); Nithin Anchuri (Huawei Noah's Ark Lab); Habib Hajimolahoseini (Huawei Toronto Research Centre); Yang Liu (Huawei Canada); Mehdi Rezagholizadeh (Huawei Noah's Ark Lab)

Abstract:Pre-trained Language Models (PLMs) have been successful for a wide range of natural language processing (NLP) tasks. State-of-the-art PLMs, however, are too large to be used on edge devices. As a result, model compression has attracted increasing attention in the NLP community. Most existing works focus on compressing encoder-based models (TinyBERT, DistilBERT, DistilRoBERTa, etc.); however, to the best of our knowledge, the compression of decoder-based models (such as GPT-2) has not been investigated much. Our paper aims to fill this gap. Specifically, we explore two directions: 1) we employ current state-of-the-art knowledge distillation techniques to improve fine-tuning of DistilGPT-2; 2) we pre-train a compressed GPT-2 model using layer truncation and compare it against the distillation-based method (DistilGPT-2). The training time of our compressed model is significantly less than that of DistilGPT-2, yet it can achieve better performance when fine-tuned on downstream tasks. We also demonstrate the impact of data cleaning on model performance.
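Soft-target knowledge distillation, which many distillation techniques build on, can be written as a temperature-scaled KL divergence between teacher and student output distributions. The sketch below assumes this Hinton-style loss with a hypothetical temperature `T`; the abstract does not name the specific distillation techniques used.

```python
import math
from typing import List

def softmax(logits: List[float], T: float) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp((z - m) / T) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]

def kd_loss(student_logits: List[float], teacher_logits: List[float], T: float = 2.0) -> float:
    """T^2 * KL(teacher || student) on temperature-softened distributions."""
    p = softmax(teacher_logits, T)
    q = softmax(student_logits, T)
    kl = sum(pi * (math.log(pi) - math.log(qi)) for pi, qi in zip(p, q) if pi > 0)
    return T * T * kl
```

In practice this term is usually combined with the ordinary cross-entropy on hard labels; the T² factor keeps gradient magnitudes comparable across temperatures.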

Authors:

Thomas Bohnstingl (IBM Research)*; Ayush Garg (IBM Research Zürich); Stanisław Woźniak (IBM Research); George Saon (IBM); Evangelos Eleftheriou (IBM Research); Angeliki Pantazi (IBM Research)

Abstract:Automatic speech recognition (ASR) is a capability that enables a program to transcribe human speech into written form. Recent developments in artificial intelligence (AI) have led to high-accuracy ASR systems based on deep neural networks, such as the recurrent neural network transducer (RNN-T). However, the core components and operations of these approaches depart from their powerful biological counterpart, i.e., the human brain. On the other hand, current biologically inspired ASR models, based on spiking neural networks (SNNs), lag behind in accuracy and focus primarily on small-scale applications. In this work, we revisit the incorporation of biologically plausible models into deep learning and substantially enhance their capabilities by taking inspiration from the diverse neural and synaptic dynamics found in the brain. In particular, we introduce neural connectivity concepts emulating axo-somatic and axo-axonic synapses. Based on this, we propose novel deep learning units with enriched neuro-synaptic dynamics and integrate them into the RNN-T architecture. We demonstrate, for the first time, that a biologically realistic implementation of a large-scale ASR model can yield performance competitive with existing deep learning models. Specifically, we show that such an implementation offers several advantages, such as reduced computational cost and lower latency, which are critical for speech recognition applications.

Authors:

Habib Hajimolahoseini (Huawei Toronto Research Centre)*; Mehdi Rezagholizadeh (Huawei Technologies); Vahid Partovi Nia (Huawei Noah's Ark Lab); Marzieh Tahaei (Huawei Noah's Ark Lab); Omar A.M.A. Mohamed Awad (Huawei Technologies); Yang Liu (Huawei Canada)

Abstract:In this paper, a progressive low-rank decomposition (LRD) method is used to compress large-scale pre-trained transformer-based language models. To this end, each fully connected layer of the transformer modules is decomposed into two consecutive smaller ones using a progressive Singular Value Decomposition technique. In contrast to many state-of-the-art compression methods, where intensive pre-training of the compressed model is necessary, progressive LRD can provide promising performance by compressing the model in the fine-tuning stage. Furthermore, current state-of-the-art model compression techniques usually face a limitation in their compression ratio, as the accuracy gap becomes significant at compression ratios higher than 2×. We show that in later steps of the iterative compression, where the decomposed models become much smaller than the original (compression factors larger than 8×), Knowledge Distillation can also be used to improve performance.
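The parameter saving from splitting one fully connected layer into two can be worked out directly from a rank-r truncated SVD. The layer sizes below (a 768 x 3072 feed-forward matrix) are illustrative assumptions, not figures from the paper; a progressive scheme would lower r over successive steps.

```latex
W \in \mathbb{R}^{m \times n}, \qquad
W \approx U_r \Sigma_r V_r^{\top}
  = \underbrace{(U_r \Sigma_r)}_{m \times r}\;\underbrace{V_r^{\top}}_{r \times n}

\text{parameters: } mn \;\longrightarrow\; r\,(m+n), \qquad
\text{compression factor} = \frac{mn}{r\,(m+n)}

m = 768,\; n = 3072,\; r = 192:\quad
\frac{768 \cdot 3072}{192\,(768 + 3072)} = \frac{2\,359\,296}{737\,280} = 3.2

\text{factor} > 8 \iff r < \frac{2\,359\,296}{8 \cdot 3840} = 76.8
```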

Authors:

Tong Yu (Adobe Research); Junda Wu (New York University); Ruiyi Zhang (Adobe Research); Handong Zhao (Adobe Research); Shuai Li (Shanghai Jiao Tong University)*

Abstract:Named Entity Recognition (NER) is an important task that enables a wide range of NLP applications. State-of-the-art NER models are based on deep learning and require sufficient labeled data. In practice, labeled data for NER is usually limited, as providing accurate labels for sentences is very time-consuming. Given an NER model trained on limited labeled data, it is desirable to develop an efficient mechanism to collect data labels and improve the model over time. To achieve this, existing works develop active learning approaches. However, these approaches are usually designed for annotators and assume that the annotators will provide exactly correct labels for each sentence selected for labeling. In this paper, we propose a simple yet effective user-in-the-loop feedback mechanism that enables end users, instead of annotators, to easily provide labels to the system. We identify a counterfactual bias in the data collected by this feedback mechanism. To alleviate this bias and achieve more sample-efficient learning, we further develop a counterfactual NER learning framework. We develop an imputation model to estimate the loss on non-displayed entity classes. By considering losses on both displayed and non-displayed entity classes, we can efficiently alleviate this display bias in the NER model. With extensive experiments, we validate the effectiveness of our feedback mechanism and learning framework.

Authors:

Patrick Xia (Johns Hopkins University)*; Richard Shin (Microsoft Semantic Machines)

Abstract:The sizes of pretrained language models make them challenging and expensive to use when there are multiple desired downstream tasks. In this work, we adopt recent strategies for model pruning during finetuning to explore the question of whether it is possible to prune a single encoder so that it can be used for multiple tasks. We allocate a fixed parameter budget and compare pruning a single model with a multitask objective against the best ensemble of single-task models. We find that under two pruning strategies (element-wise and rank pruning), the approach with the multitask objective outperforms training models separately when averaged across all tasks, and it is competitive on each individual one. Additional analysis finds that using a multitask objective during pruning can also be an effective method for reducing model sizes for low-resource tasks.

Authors:

Sharath Nittur Sridhar (Intel AI Lab)*; Anthony Sarah (Intel Corporation)

Abstract:In recent times, BERT-based models have been extremely successful in solving a variety of natural language processing (NLP) tasks, such as reading comprehension, natural language inference, and sentiment analysis. All BERT-based architectures have a self-attention block followed by a block of intermediate layers as the basic building component. However, a strong justification for the inclusion of these intermediate layers remains missing in the literature. In this work, we investigate the importance of intermediate layers for the overall network performance on downstream tasks. We show that reducing the number of intermediate layers and modifying the architecture of BERT-Base results in minimal loss in fine-tuning accuracy on downstream tasks while decreasing the number of parameters and the training time of the model. Additionally, we use centered kernel alignment and linear probing classifiers to gain insight into our architectural modifications and to show that removing intermediate layers has little impact on fine-tuned accuracy.

Authors:

Ofir Zafrir (Intel Labs, Israel)*; Ariel Larey (Intel Labs, Israel); Guy Boudoukh (Intel Labs, Israel); Haihao Shen (Intel); Moshe Wasserblat (Intel Labs, Israel)

Abstract:Transformer-based language models are applied to a wide range of applications in natural language processing. However, they are inefficient and difficult to deploy. In recent years, many compression algorithms have been proposed to increase the implementation efficiency of large Transformer-based models on target hardware. In this work, we present a new method for training sparse pre-trained Transformer language models by integrating weight pruning and model distillation. These sparse pre-trained models can be used for transfer learning on a wide range of tasks while maintaining their sparsity pattern. We demonstrate our method with three known architectures to create sparse pre-trained BERT-Base, BERT-Large, and DistilBERT. We show how the compressed sparse pre-trained models we trained transfer their knowledge to five different downstream natural language tasks with minimal accuracy loss. Moreover, we show how to further compress the sparse models’ weights to 8-bit precision using quantization-aware training. For example, with our sparse pre-trained BERT-Large fine-tuned on SQuADv1.1 and quantized to 8-bit, we achieve a compression ratio of 40X for the encoder with less than 1% accuracy loss. To the best of our knowledge, our results show the best compression-to-accuracy ratio for BERT-Base, BERT-Large, and DistilBERT.

Authors:

Ali Edalati (Huawei Technologies); Marzieh Tahaei (Huawei Noah's Ark Lab); Ahmad Rashid (Huawei Noah's Ark Lab)*; Vahid Partovi Nia (Huawei Noah's Ark Lab); James J. Clark (McGill University); Mehdi Rezagholizadeh (Huawei Technologies)

Abstract:GPT is an auto-regressive Transformer-based pre-trained language model that has attracted a lot of attention in the natural language processing (NLP) domain due to its state-of-the-art performance on several downstream tasks. The success of GPT is mostly attributed to its pre-training on huge amounts of data and its large number of parameters (from 100M to billions). Despite the superior performance of GPT (especially in few-shot or zero-shot setups), this overparameterized nature can be very prohibitive for deploying the model on devices with limited computational power or memory. This problem can be mitigated using model compression techniques; however, compressing GPT models has not been investigated much in the literature. In this work, we use Kronecker decomposition to compress the linear mappings of the GPT-2 model. Our Kronecker GPT-2 model (KnGPT2) is initialized from the Kronecker-decomposed version of the GPT-2 model and then undergoes very light pre-training on only a small portion of the training data with intermediate layer knowledge distillation (ILKD). Finally, our KnGPT2 is fine-tuned on downstream tasks using ILKD as well. We evaluate our model on both language modeling and General Language Understanding Evaluation benchmark tasks and show that, with more efficient pre-training and a similar number of parameters, our KnGPT2 significantly outperforms the existing DistilGPT2 model.
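Kronecker decomposition replaces a weight matrix of shape (m1·m2) x (n1·n2) with two small factors A (m1 x n1) and B (m2 x n2) such that W ≈ A ⊗ B, cutting the parameter count from m1·m2·n1·n2 to m1·n1 + m2·n2. The sketch below shows only the product and the parameter arithmetic; how KnGPT2 chooses factor shapes or initializes A and B is not described in the abstract, and the 2 x 2 / 384 x 384 split in the check is a hypothetical example.

```python
from typing import List, Tuple

Matrix = List[List[float]]

def kron(A: Matrix, B: Matrix) -> Matrix:
    """Kronecker product: entry (i*m2 + k, j*n2 + l) equals A[i][j] * B[k][l]."""
    m2, n2 = len(B), len(B[0])
    return [[A[i][j] * B[k][l] for j in range(len(A[0])) for l in range(n2)]
            for i in range(len(A)) for k in range(m2)]

def kron_param_counts(m1: int, n1: int, m2: int, n2: int) -> Tuple[int, int]:
    """(dense parameters, Kronecker-factored parameters) for W of shape (m1*m2, n1*n2)."""
    return m1 * m2 * n1 * n2, m1 * n1 + m2 * n2
```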

Authors:

Marko Stamenovic (Bose)*; Li-Chia Yang (Bose); Nils Westhausen (University of Oldenburg); Carl Jensen (Bose); Alex Pawlicki (Bose)

Abstract:We explore network sparsification strategies with the aim of compressing neural speech enhancement (SE) down to an optimal configuration for a new generation of low-power, microcontroller-based neural accelerators (microNPUs). We examine three distinct sparsity structures: weight pruning, block pruning, and unit pruning, and discuss their benefits and drawbacks when applied to SE. We focus on the interplay between computational throughput, memory footprint, and model quality. Our method supports all three sparsity structures above and jointly learns integer-quantized weights along with sparsity, alleviating the need for tedious manual fine-tuning. Additionally, we demonstrate offline magnitude-based pruning of integer-quantized models as a performance baseline. Although efficient speech enhancement is an active area of research, our work is the first to apply block pruning to SE and the first to address SE model compression in the context of microNPUs. Using weight pruning, we show that we are able to compress an already compact model's memory footprint by a factor of 42X, from 3.7MB to 87kB, while losing only 0.1 dB SDR in performance. We also show a computational speedup of 6.7X, with a corresponding SDR drop of only 0.59 dB, using block pruning.
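The offline baseline, magnitude-based pruning of an integer-quantized model, can be sketched as symmetric 8-bit quantization followed by zeroing either the smallest individual weights (weight pruning) or the lowest-norm contiguous blocks (block pruning). The [-127, 127] range, the block size, and the L1 block norm are assumptions for illustration; the jointly learned quantization-and-sparsity method is not shown.

```python
from typing import List, Tuple

def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization to [-127, 127]; returns (ints, scale)."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # guard against all-zero tensors
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def weight_prune(q: List[int], sparsity: float) -> List[int]:
    """Unstructured pruning: zero the fraction `sparsity` of smallest-magnitude weights."""
    k = int(len(q) * sparsity)
    smallest = set(sorted(range(len(q)), key=lambda i: abs(q[i]))[:k])
    return [0 if i in smallest else w for i, w in enumerate(q)]

def block_prune(q: List[int], block: int, sparsity: float) -> List[int]:
    """Structured pruning: zero whole contiguous blocks with the smallest L1 norm."""
    starts = list(range(0, len(q), block))
    k = int(len(starts) * sparsity)
    drop = set(sorted(starts, key=lambda s: sum(abs(w) for w in q[s:s + block]))[:k])
    return [0 if (i - i % block) in drop else w for i, w in enumerate(q)]
```

Block pruning leaves fewer, larger zero regions that an accelerator can skip wholesale, which is why it tends to favor speed, whereas unstructured weight pruning mainly shrinks the memory footprint.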

Authors:

Soham D Tiwari (Manipal Institute of Technology, Manipal)*; Kshitiz Lakhotia (Manipal Institute of Technology, Manipal); Manjunath Mulimani (Manipal Institute of Technology, Manipal)

Abstract:Sound event detection (SED) in machine listening entails identifying the different sounds in an audio file and the start and end times of each sound event in the audio. SED is used in various applications such as audio surveillance, speech recognition, and context-based indexing and retrieval of data in multimedia databases. However, in real-life scenarios, audio from various sources is seldom free of interfering noise or disturbance. In this paper, we test the performance of the You Only Hear Once (YOHO) algorithm on noisy audio data. Inspired by the You Only Look Once (YOLO) algorithm in computer vision, the YOHO algorithm can match the performance of various state-of-the-art algorithms on datasets such as the Music Speech Detection Dataset, TUT Sound Event, and Urban-SED, but at lower inference times. Specifically, we explore the performance of the YOHO algorithm on the VOICe dataset, which contains audio files with noise at different sound-to-noise ratios (SNR). YOHO can outperform, or at least match, the best-performing SED algorithms reported in the VOICe dataset paper, while making inferences in less time.
